I have a database migrated from MySQL to PostgreSQL (I had my good reasons but this post is not about that). In MySQL because of MVCC behaviour it makes sense and it’s actually a recommendation to split frequently updated columns from large tables especially if using a web framework like Django which always updates the full row so even if MySQL stores the TEXT column off-page (look at InnoDB Blob storage for more details) the TEXT columns as well will be read from the separate extent and written to the Doublewrite buffer and to the Undo space (and binary logs if it’s set). To overcome this situation it’s beneficial to have the counters in a separate table.

|

1 2 3 4 5 6 7 8 9 10 11 12 |

CREATE TABLE post ( id int unsigned PRIMARY KEY AUTO_INCREMENT, title varchar(255), body text ) Engine=InnoDB; CREATE TABLE post_counters ( post_id int unsigned PRIMARY KEY, read int unsigned not null default 0, commented int unsigned not null default 0, CONSTRAINT `post_id` FOREIGN KEY (`post_id`) REFERENCES `post` (`id`) ) Engine=InnoDB; |

or alternatively if we want to sort on popularity for example we want those metrics in the main table so we can split the blob to it’s separate table. It has it’s own benefit of being able to list all the posts with comment and read counters by using one table only. And we join the details table when someone read an individual post.

|

1 2 3 4 5 6 7 8 9 10 11 12 |

create table post ( id int unsigned PRIMARY KEY AUTO_INCREMENT, title varchar(255), read int unsigned not null default 0, commented int unsigned not null default 0, ) Engine=InnoDB; create table post_details ( post_id int unsigned PRIMARY KEY, body text CONSTRAINT `post` FOREIGN KEY (`post_d`) REFERENCES `post` (`id`) ) Engine=InnoDB |

Having said all these I was wondering if the same thing is true for PostgreSQL. So I have setup a benchmark.

I’ve been running the test with over 1000 transactions / sec with 3 different ways:

- Normal design where everything is in one table

- Split counters into their own table

- Alternate design where counters are in the main table and blobs are separate

I used the open source shakespeare database for that. Every query was run against the DB 100 times to have a large enough sample.

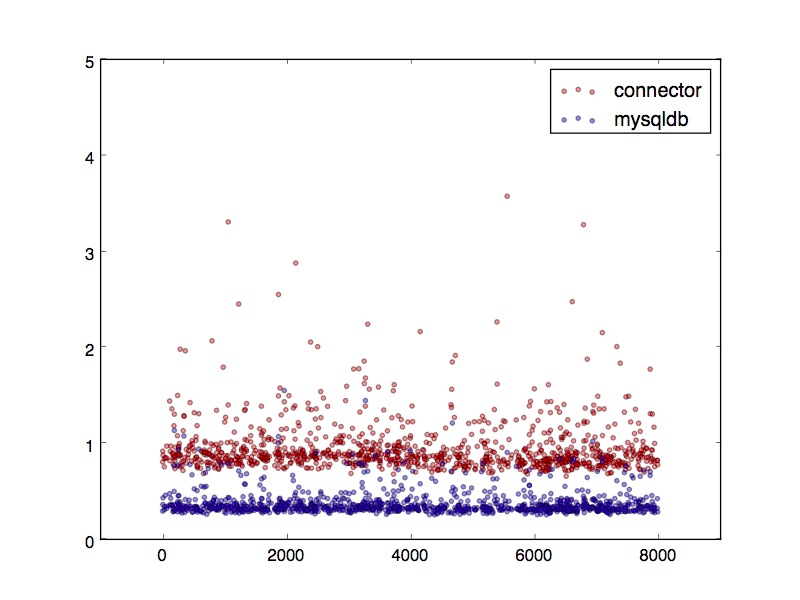

The results are in milliseconds.

Updating the counter column only

The queries

|

1 2 3 |

update paragraph_couters set read_counter = read_counter + 1 where p_id =?; update paragraph_alternate set read_counter = read_counter + 1 where paragraphid = ?; update paragraph set read_counter = read_counter + 1 where paragraphid = ?; |

Results (update times)

Although the average query time for split was the lowest but taking the standard deviation into account you can see there are no significant difference between the different designs.

| Avg | StdDev | Min | Max | 95% lower | 95% upper | |

| Normal | 0,658 | 0,160 | 0,459 | 1,886 | 0,345 | 0,970 |

| Split | 0,613 | 0,121 | 0,444 | 1,200 | 0,377 | 0,850 |

| Alternate | 0,675 | 0,144 | 0,479 | 1,551 | 0,393 | 0,956 |

Updating the full row

What if we mimic what Django would do and update the full row and not just the counters.

Results (update times)

| Avg | StdDev | Min | Max | 95% Lower | 95% Upper | |

| Normal | 0,860 | 0,181 | 0,606 | 1,695 | 0,504 | 1,215 |

| Split | 0,645 | 0,164 | 0,458 | 1,697 | 0,324 | 0,966 |

| Alternate | 0,748 | 0,137 | 0,541 | 1,477 | 0,480 | 1,017 |

Here you definitely can notice a slight difference between the split design and the other two. The split design yields 25% better average performance comparing to the large table. But the confidence intervals shows that it is still statistically irrelevant. Although it’s unlikely but possible that the normal is actually the same performant as the split design.

The alternate design gives roughly 13% better average performance comparing to the large table. The same also has to be noted as above. Statistically still irrelevant.

Select performance

Obviously it has it’s own penalty for your selects if you need to join two tables instead of querying one. So let’s see an example where I fetch the top 10 most read posts.

After creating indexes on the read_counter column on every table.

Queries:

|

1 2 3 4 5 |

select * from paragraph order by read_counter desc limit 10; select * from paragraph_alternate order by read_counter desc limit 10; select * from paragraph join paragraph_couters c on p_id = paragraphid order by c.read_counter desc limit 10; |

Please note that using the alternative design I didn’t need to join my details table unless I want the full body of the post.

Results (query times)

| Avg | StdDev | Min | Max | 95% Lower | 95% Upper | |

| Normal | 1,011 | 0,216 | 0,862 | 3,044 | 0,587 | 1,435 |

| Split | 1,536 | 0,109 | 1,405 | 2,380 | 1,321 | 1,751 |

| Alternate | 0,890 | 0,083 | 0,766 | 1,499 | 0,728 | 1,052 |

With the split design the query time increased significantly for the split design by almost 52% (this is statistically relevant as well) while the alternative design even improved the performance by 12% (this is statistically not relevant though).

Disclaimer

You can pretty much achieve the same query time with limiting the columns in your select with the normal large table design. I intended to mimic a web framework’s behaviour so I haven’t done that but that’s an absolutely viable option too.

Conclusion

PostgreSQL handles the large blobs quite well so unless you have updates on your tables in the order of magnitude of 1000 or more per seconds you won’t see a significant benefit of splitting your tables based on the update frequency but you’re going to lose some on the retrieval.

If you like to squeeze the last drop of performance out of your system and don’t mind to pay the complexity price try the alternatively split design which boosts both the read and the write performance.

Taking the normal (large table) design as the baseline here are the statistics about what each improves.

| Normal | Split | Alternative | |

| Update | 100% | 75% | 88% |

| Select | 100% | 152% | 87% |

Only bold numbers are statistically relevant.

Recent comments